Summary

This guide is for early childhood education services, schools and kura. It gives you guidance on how to evaluate your own practices effectively to improve learners’ success.

Our guide shows and explains the processes and reasoning you can use to do and use internal evaluation for improvement.

We published this guide as a resource

We wanted this information to be available as a resource to a range of groups in education. We published this guide to help schools, kura, early childhood services and their communities.

What’s in the guide

The guide describes:

- what effective internal evaluation is

- what it involves

- how to evaluate in ways that will enhance outcomes for learners.

The guide is in these six sections:

- Introduction

- Engaging in effective internal evaluation

- Scope, depth and focus

- Processes and reasoning

- To think about and discuss

- Using processes and reasoning for improvement

Who can use the guide

A wide range of groups in education can use this guide. These groups could be:

- school boards of trustees

- management committees for early learning services

- leadership teams

- teachers and kaiako

- community members.

Community organisations interested in internal evaluation for improvement can also use this guide.

Other documents that support this guide

We based this guide on two publications about internal evaluation in schools. The first is about how to do and use internal evaluation. The second gives case studies of good practice in schools.

These publications are:

Effective School Evaluation: How to do and use internal evaluation for improvement (2015)

Internal Evaluation: Good Practice (2015)


Effective Internal Evaluation for Improvement

Introduction

This overview of the processes and reasoning involved in effective internal evaluation for improvement draws on a recently published resource, Effective Internal Evaluation: How to do and use internal evaluation for improvement (2015). The Education Review Office and the Ministry of Education published this resource jointly. Section Two of the resource, “Engaging in effective internal evaluation”, describes both the ‘how to’ and the evaluative thinking that contributes to improvement. Because these processes and the associated reasoning can be used in many different evaluation contexts and settings, we decided to publish this overview so that more people could access and use this information. You might be a school board of trustees, the management committee of an early learning service, or a leader, teacher or member of a community of learning. Community organisations interested in internal evaluation for improvement could also use this resource.

Engaging in effective internal evaluation

Evaluation is the engine that drives improvement and innovation. Internal evaluation is undertaken to assess what is and is not working, and for whom, and then to determine what changes are needed, particularly to advance equity and excellence goals. Internal evaluation involves asking good questions, gathering fit-for-purpose data and information, and then making sense of that information. Much more than a technical process, evaluation is deeply influenced by your values and those of your community. Effective internal evaluation is always driven by the motivation to improve.

When internal evaluation is done well, processes are coherent and align with your vision and strategic goals. You work collaboratively to ensure that the efforts that go into evaluation lead to improvement. The urgency to improve is shared, and can be articulated, by all.

Evidence from research shows that there are organisational conditions that support development of the capacity to do and use evaluation for improvement and innovation.

These include:

- evaluation leadership

- a learning-oriented community of professionals that demonstrates agency in using evaluation for improvement in practice and outcomes

- opportunity to develop technical evaluation expertise (including access to external expertise)

- access to, and use of, appropriate tools and methods

- systems, processes and resources that support purposeful data gathering, knowledge building and decision making.

Scope, depth and focus

Internal evaluations vary greatly in scope, depth and focus depending on the purpose and the context. An evaluation may be strategic, linked to vision, values, goals and targets; or it may be a business-as-usual review of, for example, policy or procedures. It could also be a response to an unforeseen (emergent) event or issue.

Figure 1 shows how these different purposes can all be viewed as part of a common improvement agenda.

Figure 1: Types of Internal Evaluation

The three types of evaluation (strategic, regular and emergent) sit in an outer circle of koru-shaped circles, which grow larger and stronger as they move outwards from the centre. Learners are at the centre.

Strategic evaluation

Strategic evaluations focus on activities related to your vision, values and goals. They aim to find out to what extent your vision is being realised, goals achieved, and progress made. Strategic evaluations are a means of answering such key questions as: To what extent are all our learners experiencing success? To what extent are improvement initiatives making a difference for all learners? How can we do better? Because strategic evaluations explore these fundamental matters, they need to be in-depth, and so they take time.

Regular evaluation

Regular (planned) evaluations are business-as-usual evaluations or inquiries where data is gathered, progress towards goals is monitored, and the effectiveness of programmes or interventions is assessed. They ask: To what extent do our policies and practices promote the learning and wellbeing of all learners? How fully have we implemented the policies we have put in place to improve outcomes for all learners? How effective are our strategies for accelerating the progress of target learners? Business-as-usual evaluations vary in scope and depth and feed back into your strategic and annual plans.

Emergent evaluation

Emergent (or spontaneous) evaluations are a response to an unforeseen event or an issue picked up by routine scanning or monitoring. Possible focus questions include: What is happening? Who for? Is this okay? Should we be concerned? Why? Do we need to take a closer look? Emergent evaluations arise out of high levels of awareness about what is happening.

To think about and discuss

- What is the focus of our evaluations?

- Do we use all three types of evaluation (strategic, regular and emergent)?

- In what areas could we improve our understanding and practice of evaluation?

- How do we ensure that all evaluation supports our vision, values and strategic goals?

- How do we ensure that all evaluation promotes equitable outcomes for learners?

Figure 2: Learner-focused evaluation processes and reasoning

The processes form an outer circle, linked at each stage to each other and to learners at the centre. The cycle starts with Noticing, then moves to Investigating, Collaborative sense making, Prioritising to take action, and finally Monitoring and evaluating impact, before repeating. The central theme is “We can do better”.

Processes and reasoning

Internal evaluation requires those involved to engage in deliberate, systematic processes and reasoning, with improved outcomes for all learners as the ultimate aim. Those involved collaborate to:

- investigate and scrutinise practice

- analyse data and use it to identify priorities for improvement

- monitor implementation of improvement actions and evaluate their impact

- generate timely information about progress towards goals and the impact of actions taken.

Figure 2 identifies five interconnected, learner-focused processes that are integral to effective evaluation for improvement.

Figures 3 to 7 unpack each of these processes in terms of the conditions that support their effectiveness, the reasoning they require, and the activities or actions they involve.

Figure 3: Noticing

The diagram repeats the cycle shown in Figure 2. All parts are grey except for "Noticing", which is green, to link to the discussion about Noticing below the diagram.

When noticing

- We maintain a learner focus

- We are aware of what is happening for all learners

- We have an ‘inquiry habit of mind’

- We are open to scrutinising our data

- We see dissonance and discrepancy as opportunities for deeper inquiry

We ask ourselves

- What’s going on here?

- For which learners?

- Is this what we expected?

- Is this good?

- Should we be concerned? Why?

- What is the problem or issue?

- Do we need to take a closer look?

Noticing involves

- Scanning

- Being aware of hunches, gut reactions and anecdotes

- Knowing when to be deliberate and intentional

- Recognising the context and focus for evaluation – strategic, regular or emergent

“If the results don’t look good we need to be honest about them”

“Knowing what the problem is, is critical”

Figure 4: Investigating

The diagram repeats the cycle shown in Figure 2. All parts are grey except for "Investigating", which is pink, to link to the discussion about Investigating below the diagram.

When investigating

- We focus on what is happening for all our learners

- We ensure we have sufficient data to help us respond to our questions

- We check that our data is fit-for-purpose

- We actively seek learners’ and parents’ perspectives

- We check we know about all learners in different situations

We ask ourselves

- What do we already know about this?

- How do we know this?

- What do we need to find out?

- How might we do this?

- What ‘good questions’ should we ask?

- How will we gather relevant and useful data?

Investigating involves

- Taking stock

- Bringing together what we already know (data/information)

- Using existing tools or developing new ones to gather data

- Identifying relevant sources of data/evidence

- Seeking different perspectives

“We make sure that we include the voices and perspectives of children and their whānau.”

Figure 5: Collaborative sense making

The diagram repeats the cycle shown in Figure 2. All parts are grey except for "Collaborative sense making", which is blue, to link to the discussion about Collaborative sense making below the diagram.

When making sense of our data and information

- We ensure we have the necessary capability (data literacy) and capacity (people/time)

- We are open to new learning

- We know ‘what is so’ and have determined ‘so what’

- We know what ‘good’ looks like so that we can recognise our strengths and areas for improvement

- We have a robust evidence base to inform our decision making and prioritising

We ask ourselves

- What is our data telling us/what insights does it provide?

- Is this good enough?

- How do we feel about what we have found?

- Do we have different interpretations of the data? If so, why?

- What might we need to explore further?

- What can we learn from research evidence about what ‘good’ looks like?

- How close are we to that?

Collaborative sense making involves

- Scrutinising our data with an open mind

- Working with different kinds of data, both quantitative and qualitative

- Drawing on research evidence and using suitable frameworks or indicators when analysing and making sense of our data

“We want to know what’s good – and what’s not good enough”

“To effect change, teachers needed to be on board – it was not going to be a two-meeting process”

“What are we doing well? What can we improve on? How can we enrich and accelerate the learning of our learners?”

Figure 6: Prioritising to take action

The diagram repeats the cycle shown in Figure 2. All parts are grey except for "Prioritising to take action", which is orange, to link to the discussion about Prioritising to take action below the diagram.

When prioritising to take action

- We are clear what problem or issue we are trying to solve

- We understand what we need to improve

- We are determined to achieve equitable progress and outcomes for all learners

- We are clear about the actions we need to take and why

- We have the resources necessary to take action

- We have a plan that sets out clear expectations for change

We ask ourselves

- What do we need to do and why?

- What are our options?

- Have we faced this situation before?

- What can we do to ensure better progress and outcomes for more of our learners?

- How big is the change we have in mind?

- Can we get the outcomes we want within the timeframe we have specified?

- What strengths do we have to draw on/build on?

- What support/resources might we need?

Prioritising to take action involves

- Considering possible options in light of the ‘what works’ evidence

- Being clear about what needs to change and what doesn’t

- Identifying where we have the capability and capacity to improve

- Identifying what external expertise we might need

- Prioritising our resources to achieve equitable outcomes

“Prioritising is based on having capacity – you can’t stretch yourself too far”

“Everything we have done has been based on the evidence”

“We are about wise owls not bandwagons”

“Everything we’ve done has been decided with data, both quantitative and qualitative”

“If we keep doing the same things we will keep getting the same results”

Figure 7: Monitoring and evaluating impact

The diagram repeats the cycle shown in Figure 2. All parts are grey except for "Monitoring and evaluating impact", which is dark grey, to link to the discussion about Monitoring and evaluating impact below the diagram.

When monitoring and evaluating

- We know what we are aiming to achieve

- We are clear about how we will monitor progress

- We know how we will recognise progress

- We are focused on ensuring all learners have equitable opportunities to learn

- We have the capability and capacity to evaluate the impact of our actions

We ask ourselves

- What is happening as a result of our improvement actions?

- What evidence do we have?

- Which of our learners are/are not benefiting?

- How do we know?

- Is this good enough?

- Do we need to adjust what we are doing?

- What are we learning?

- Can we use this learning in other areas?

Monitoring and evaluating involves

- Keeping an eye on the data for evidence of what is/is not working for all learners

- Having systems, processes and tools in place to track progress and impact

- Developing progress markers that will help us to know whether we are on the right track

- Checking in with learners and their parents and whānau

- Knowing when to adjust or change actions or strategies

“Success is still fragile – if you have a group (that is not achieving to expectations) you focus on them and keep focusing on them”

Why not start a discussion about what each of the five evaluation processes might mean in your own organisation? This will clarify your thinking about evaluation and evaluation practices and help identify areas where you need to develop greater capability or capacity.

To think about and discuss

- What processes do we currently use for the purposes of evaluation and review?

- How do our processes reflect those described above?

- Which parts of these processes do we do well? How do we know?

- How might we use the processes described above to improve the quality and effectiveness of our evaluation practice?

Effective evaluation requires us to think deeply about the data and information we gather and what it means in terms of priorities for action. By asking the right questions of ourselves, we will keep the focus on our learners, particularly those for whom current practice is not working. The twin imperatives of excellence and equitable outcomes should always be front and centre whatever it is that we are evaluating.

While every community’s improvement is unique, it can be described under these four headings:

- Context for improvement

- Improvement actions taken

- Shifts in practice

- Outcomes for learners.

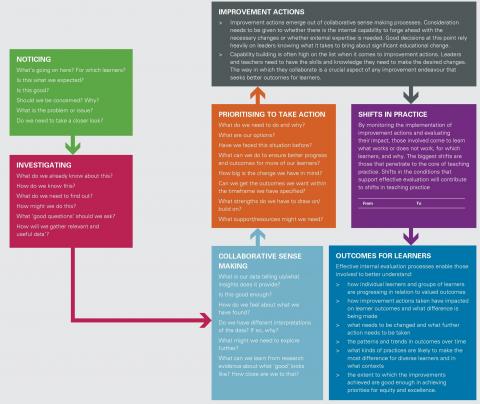

The framework below shows how the evaluation processes and reasoning are integral to, and embedded in, ongoing improvement for equity and excellence.

Using evaluation processes and reasoning for improvement

Every context is different. It may be that the appointment of a new leader provides the catalyst for change. It may be that external evaluators, by posing ‘stop and think’ questions, motivate leaders and teachers to evaluate aspects of practice to improve outcomes. Whatever the context, engaging in evaluation for improvement is motivated by the need to make changes that will have a positive impact on the learning and wellbeing of all learners. Such change is sustained by the belief that we can do better.

This flowchart illustrates how evaluation processes and reasoning are used for improvement. It sets out the stages in different coloured boxes, each linked to the next by an arrow.

The first box is Noticing. Questions for Noticing are: What’s going on here? For which learners? Is this what we expected? Is this good? Should we be concerned? Why? What is the problem or issue? Do we need to take a closer look?

This leads to the second box, Investigating. Questions for Investigating are: What do we already know about this? How do we know this? What do we need to find out? How might we do this? What ‘good questions’ should we ask? How will we gather relevant and useful data?

This leads to Collaborative sense making in the third box. Questions for Collaborative sense making are: What is our data telling us/what insights does it provide? Is this good enough? How do we feel about what we have found? Do we have different interpretations of the data? If so, why? What might we need to explore further? What can we learn from research evidence about what ‘good’ looks like? How close are we to that?

This leads to the fourth box, Prioritising to take action. Questions for Prioritising to take action are: What do we need to do and why? What are our options? Have we faced this situation before? What can we do to ensure better progress and outcomes for more of our learners? How big is the change we have in mind? Can we get the outcomes we want within the timeframe we have specified? What strengths do we have to draw on/build on? What support/resources might we need?

The fifth box discusses Improvement actions. There are two sections in the discussion.

- Improvement actions emerge out of collaborative sense making processes. Consideration needs to be given to whether there is the internal capability to forge ahead with the necessary changes or whether external expertise is needed. Good decisions at this point rely heavily on leaders knowing what it takes to bring about significant educational change.

- Capability building is often high on the list when it comes to improvement actions. Leaders and teachers must have the skills and knowledge required to make the desired changes. The way in which they collaborate is a crucial aspect of any improvement endeavour that seeks better outcomes for learners.

Improvement actions lead to Shifts in practice in the sixth box, which explains that by monitoring the implementation of improvement actions and evaluating their impact, those involved come to learn what works or does not work, for which learners, and why. The biggest shifts are those that penetrate to the core of teaching practice. Shifts in the conditions that support effective evaluation will contribute to shifts in teaching practice. Each shift is shown as a move from an existing practice to a changed and improved practice.

This leads to the seventh and final box, Outcomes for learners, to complete the flow chart.

This box explains that effective internal evaluation processes enable those involved to better understand:

- how individual learners and groups of learners are progressing in relation to valued outcomes

- how improvement actions taken have impacted on learner outcomes and what difference is being made

- what needs to be changed and what further action needs to be taken

- the patterns and trends in outcomes over time

- what kinds of practices are likely to make the most difference for diverse learners and in what contexts

- the extent to which the improvements achieved are good enough in achieving priorities for equity and excellence.

Publication Information and Copyright

Effective Internal Evaluation for Improvement

Published 2016

© Crown copyright

ISBN 978-0-478-43849-9

Except for the Education Review Office logo, this copyright work is licensed under the Creative Commons Attribution 3.0 New Zealand licence. In essence, you are free to copy, distribute and adapt the work, as long as you attribute the work to the Education Review Office and abide by the other licence terms. In your attribution, use the wording ‘Education Review Office’, not the Education Review Office logo or the New Zealand Government logo.